How to Build and Host a Full-Stack App on the Edge For Free

Published on: 10/3/2025

Free web hosting is not limited to static web pages. You can also host a full-stack app for free on the edge (i.e. close to the user) using Cloudflare Workers. This guide explains:

How to create and host a chat bot app for free using Cloudflare Workers

I chose an AI chat bot as the example for this tutorial not only because that is what I like building most, but also because this app needs a backend: to enforce usage limits and to hide your API keys (the passwords for interacting with code or models remotely) that are required to call a third-party language model. In addition, many API providers discourage or disallow client-side-only calls.

For our example I will use the Groq API to interact with a Llama 3.3 70B model. It is very fast and free to use, with generous usage limits (1K requests per day).
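To make the "hide your API key" point concrete, here is a minimal sketch of how a backend could assemble the call to Groq. The endpoint URL and the model id below are my assumptions about Groq's OpenAI-compatible API, and `buildGroqRequest` is a hypothetical helper name; check the values against the Groq documentation and the actual worker.js before relying on them.

```javascript
// Assumed values: Groq's OpenAI-compatible endpoint and a Llama 3.3 70B model id.
const GROQ_URL = "https://api.groq.com/openai/v1/chat/completions";
const GROQ_MODEL = "llama-3.3-70b-versatile";

// Build the arguments for fetch(). The API key is added here, on the server,
// so it never appears in the HTML or JavaScript that is sent to the browser.
function buildGroqRequest(apiKey, messages) {
  return {
    url: GROQ_URL,
    init: {
      method: "POST",
      headers: {
        "Authorization": `Bearer ${apiKey}`,
        "Content-Type": "application/json",
      },
      // stream: true asks for the reply to be sent piece by piece.
      body: JSON.stringify({ model: GROQ_MODEL, messages, stream: true }),
    },
  };
}

// Usage inside a worker (not executed here):
//   const { url, init } = buildGroqRequest(env.GROQ_API_KEY, messages);
//   const upstream = await fetch(url, init);
```

The browser only ever sends the chat messages to your own backend; the key is attached in the last hop.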

What we set out to build

A small, fast and free AI assistant website that:

a) uses Cloudflare Pages for the chat UI (just as we did before for static web pages);

b) talks to a tiny edge API (a Cloudflare Worker). To let the browser call the API safely without a user account, we use a short-lived token (JWT);

c) streams model replies live from Groq (Llama 3.3 70B), which is free for up to 1K requests per day;

d) finally, enforces a daily message limit for each visitor on the client side (we will implement backend usage limits later in another blog post).

No user registration, no accounts, no server to manage. Everything runs on Cloudflare’s “edge” (their global network), yet it is reasonably secure.
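To illustrate what "streams model replies live" means in practice, here is a small sketch of how text fragments could be pulled out of a streamed reply. It assumes the stream follows the OpenAI-style server-sent events format (`data: {...}` lines ending with `data: [DONE]`); `extractDeltas` is a hypothetical helper, not code from the repo.

```javascript
// Extract the text fragments from a piece of an OpenAI-style event stream.
// Assumes every event arrives as a complete "data: {...}" line; a real client
// must also buffer lines that are split across network chunks.
function extractDeltas(sseText) {
  let out = "";
  for (const line of sseText.split("\n")) {
    const trimmed = line.trim();
    if (!trimmed.startsWith("data:")) continue; // skip blank separator lines
    const payload = trimmed.slice(5).trim();
    if (payload === "[DONE]") break; // end-of-stream marker
    const event = JSON.parse(payload);
    out += event.choices?.[0]?.delta?.content ?? "";
  }
  return out;
}

const sample =
  'data: {"choices":[{"delta":{"content":"Hel"}}]}\n\n' +
  'data: {"choices":[{"delta":{"content":"lo"}}]}\n\n' +
  "data: [DONE]\n\n";
console.log(extractDeltas(sample)); // prints "Hello"
```

In the chat UI each fragment would be appended to the message bubble as soon as it arrives, which is what makes the reply appear to be typed live.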

App architecture

UI on Cloudflare Pages, API on a Cloudflare Worker.

Pages (frontend): hosts the HTML/CSS/vanilla JS. It’s a static site, so it’s cheap (i.e. free for us on the free plan), globally cached, and fast to load.

Worker (backend): a tiny edge server that only wakes up for API calls. This separation keeps page loads off the Worker (saves quota) and lets us secure the API more tightly.
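The split can be pictured with a rough sketch of a worker that answers only API routes and nothing else. The route names `/api/token` and `/api/chat` are hypothetical choices for illustration, and the real worker.js may be organized differently.

```javascript
// Small helper for JSON responses.
function json(body, status = 200) {
  return new Response(JSON.stringify(body), {
    status,
    headers: { "Content-Type": "application/json" },
  });
}

// Hypothetical route names; this is a sketch, not the repo's worker.js.
const worker = {
  async fetch(request) {
    const { pathname } = new URL(request.url);
    if (pathname === "/api/token") {
      return json({ note: "would issue a short-lived JWT" });
    }
    if (pathname === "/api/chat" && request.method === "POST") {
      return json({ note: "would verify the JWT and call Groq" });
    }
    // The worker serves no pages; the UI lives on Cloudflare Pages.
    return new Response("Not found", { status: 404 });
  },
};

// In a deployed worker this object would be the module's default export:
// export default worker;
```

Because HTML, CSS and JS never pass through the worker, only real chat traffic counts against its request quota.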

Prerequisites

Let's start by covering the bases.

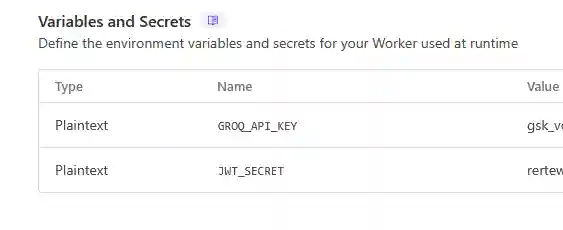

I) To build this app I will use Groq as the provider for the open-source model Llama 3.3 70B. Groq runs model inference on its own chips at very high speed and provides 1000 requests per day for free. Sign up to Groq here and create an API key. Call the key GROQ_API_KEY and keep it secret: it is your password for interacting with Groq models.

II) To protect your app from unauthorized programmatic access we will use a JSON Web Token (JWT). You need to come up with a random string of 32+ characters and call it JWT_SECRET.

Front end for chat app

We will create the static pages on CF infra just as we did earlier.

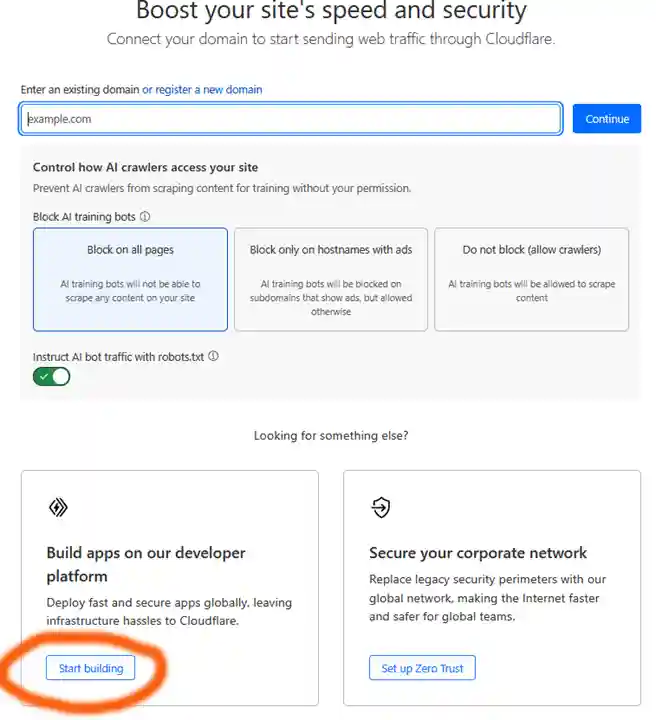

1) Log in to your CF account and look at the sidebar. If it is your first time on the platform, you will be greeted with a welcome board:

Choose "Start building", then "Create application"

2) In the sidebar select: Compute (Workers) -> Workers & Pages, then click the "Create application" button

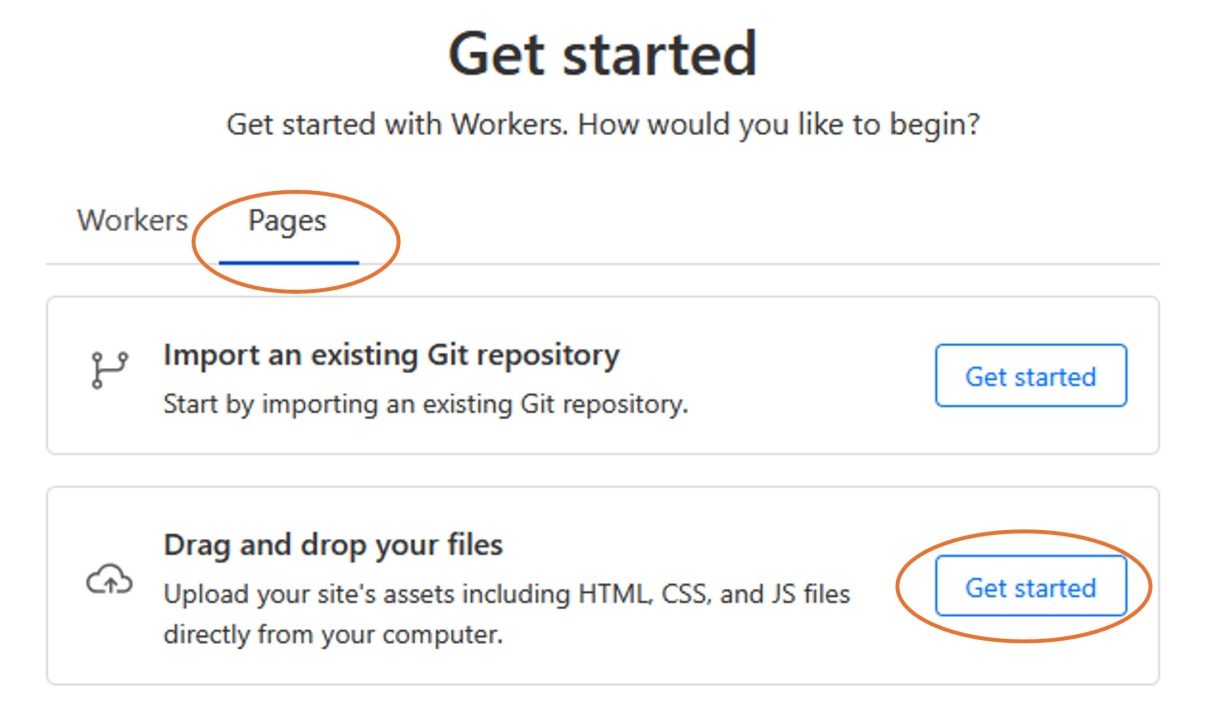

3) Stay on the "Pages" tab and choose "Drag and drop your files"

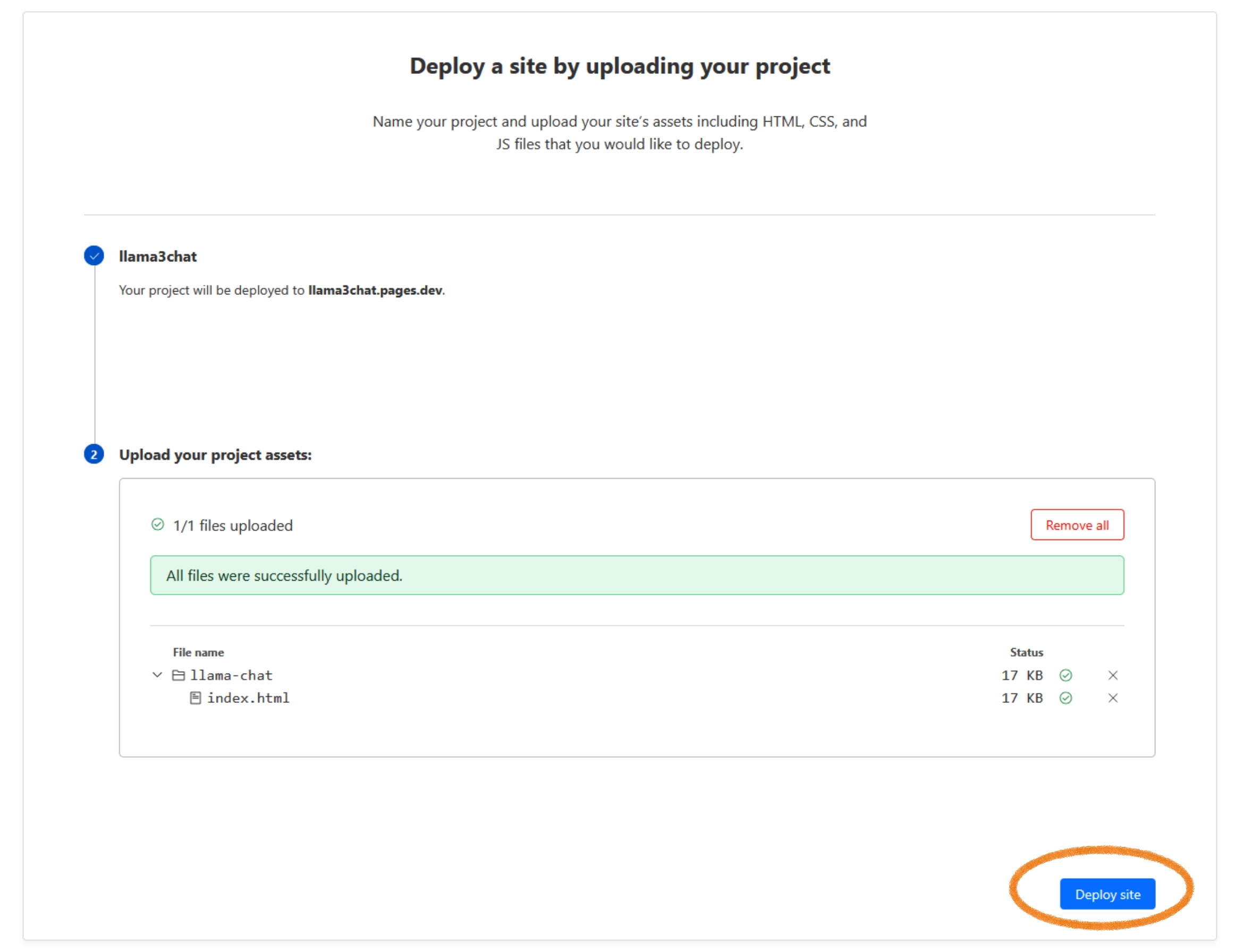

4) Give your project a unique name. I will call mine llama3chat; it will then be hosted at https://llama3chat.pages.dev

5) Now you need a file with the frontend. Download it from my GitHub repo: https://github.com/ZeroCostStartup/llama-chat/blob/main/index.html. Zip it or place it in a folder. The folder name does not matter.

6) Upload the file (or folder) and deploy the pages

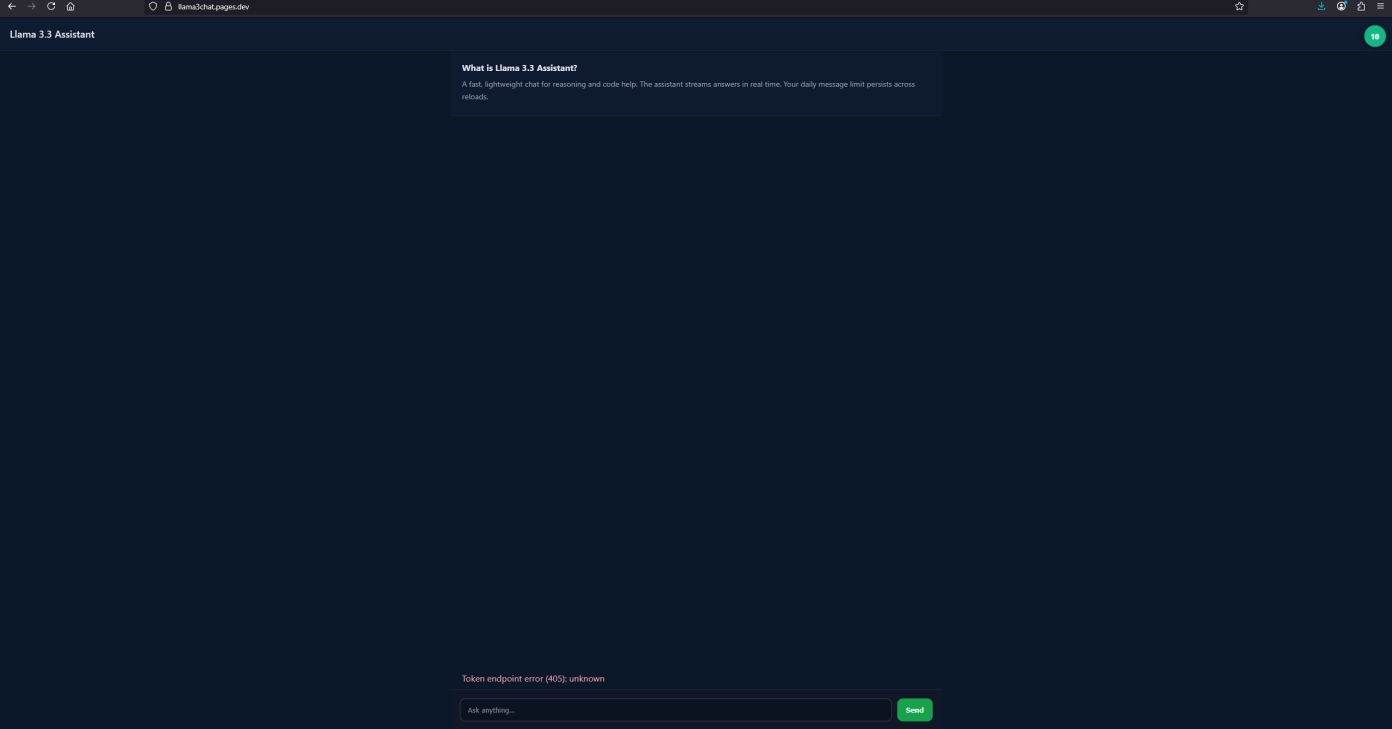

The pages are now deployed at https://your-project-name.pages.dev and will look like the following:

Of course, the app will not work without a backend, so let's create one.

CF Worker as a backend

We now create a worker for our app's backend. The initial steps are similar to creating a static web site on CF infra.

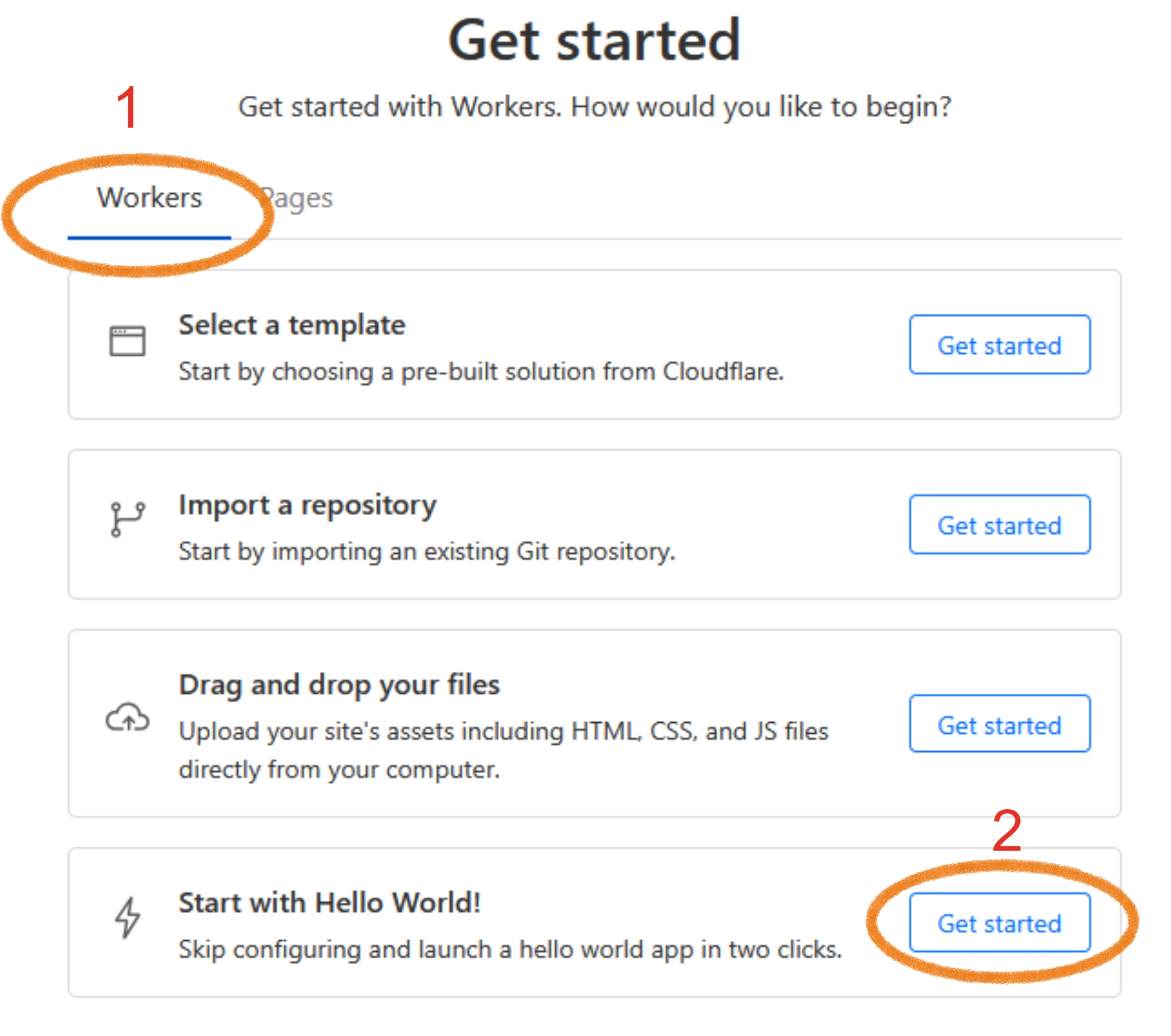

Select: Compute (Workers) -> Workers & Pages -> Click "Create application" button but this time we stay on the "Workers" tab:

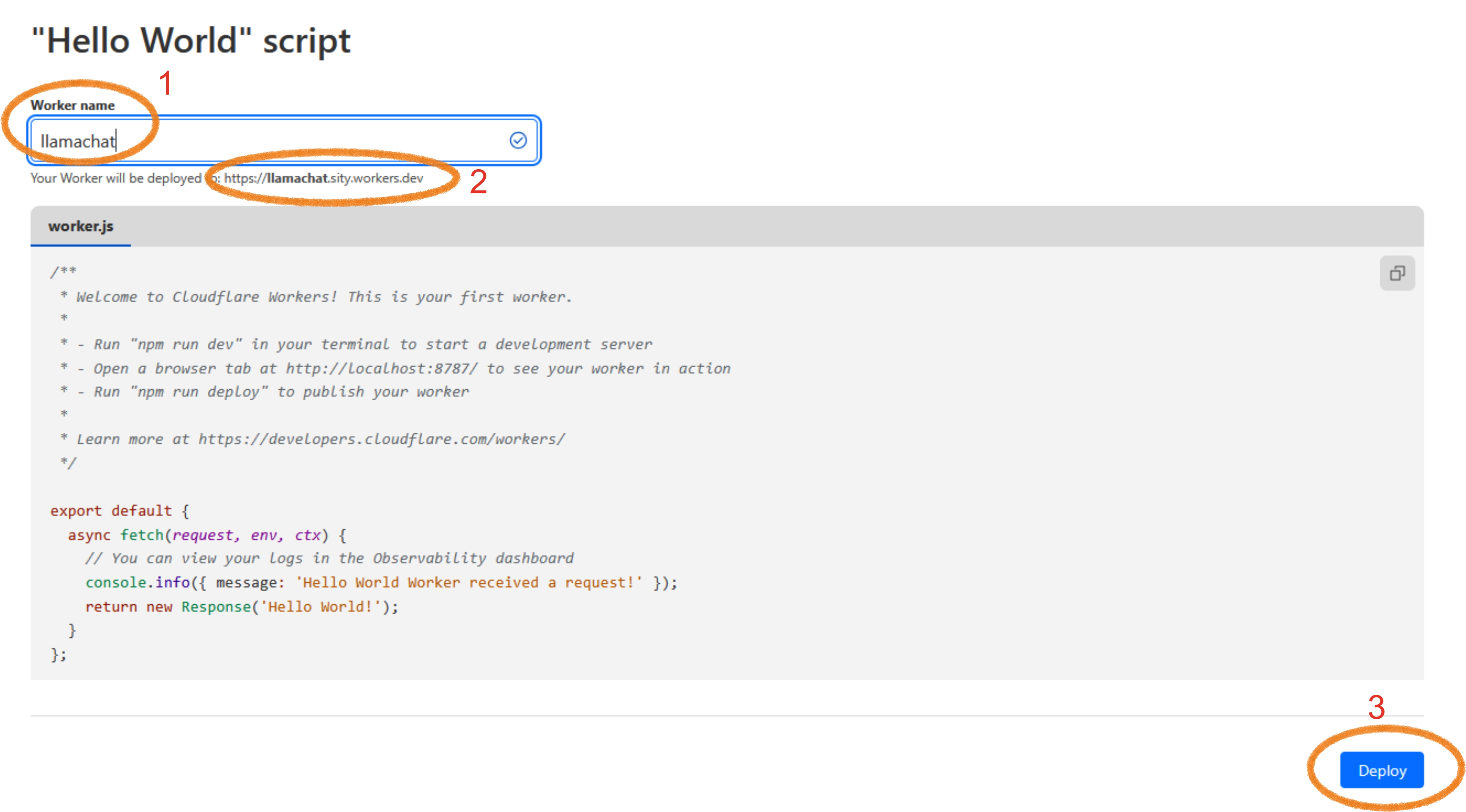

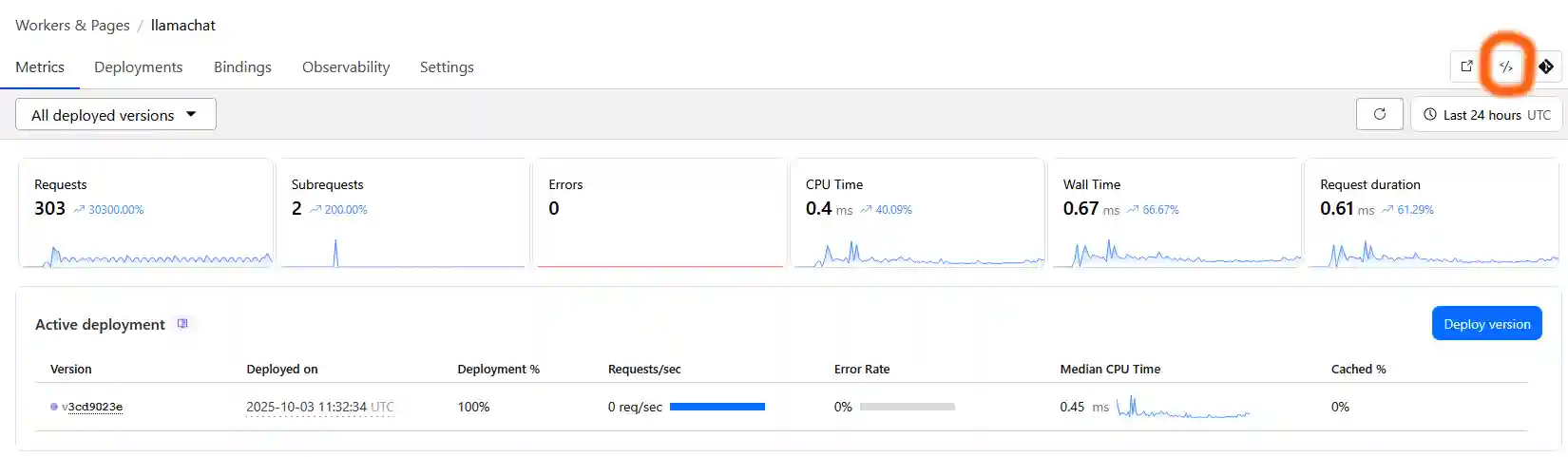

And then continue with "Start with Hello World!". Call your new worker e.g. llamachat:

Pay attention to your worker's URL. It is your-project-name.your-user-name.pages.dev. See above #2. Wrtie it down. Click "Deploy"

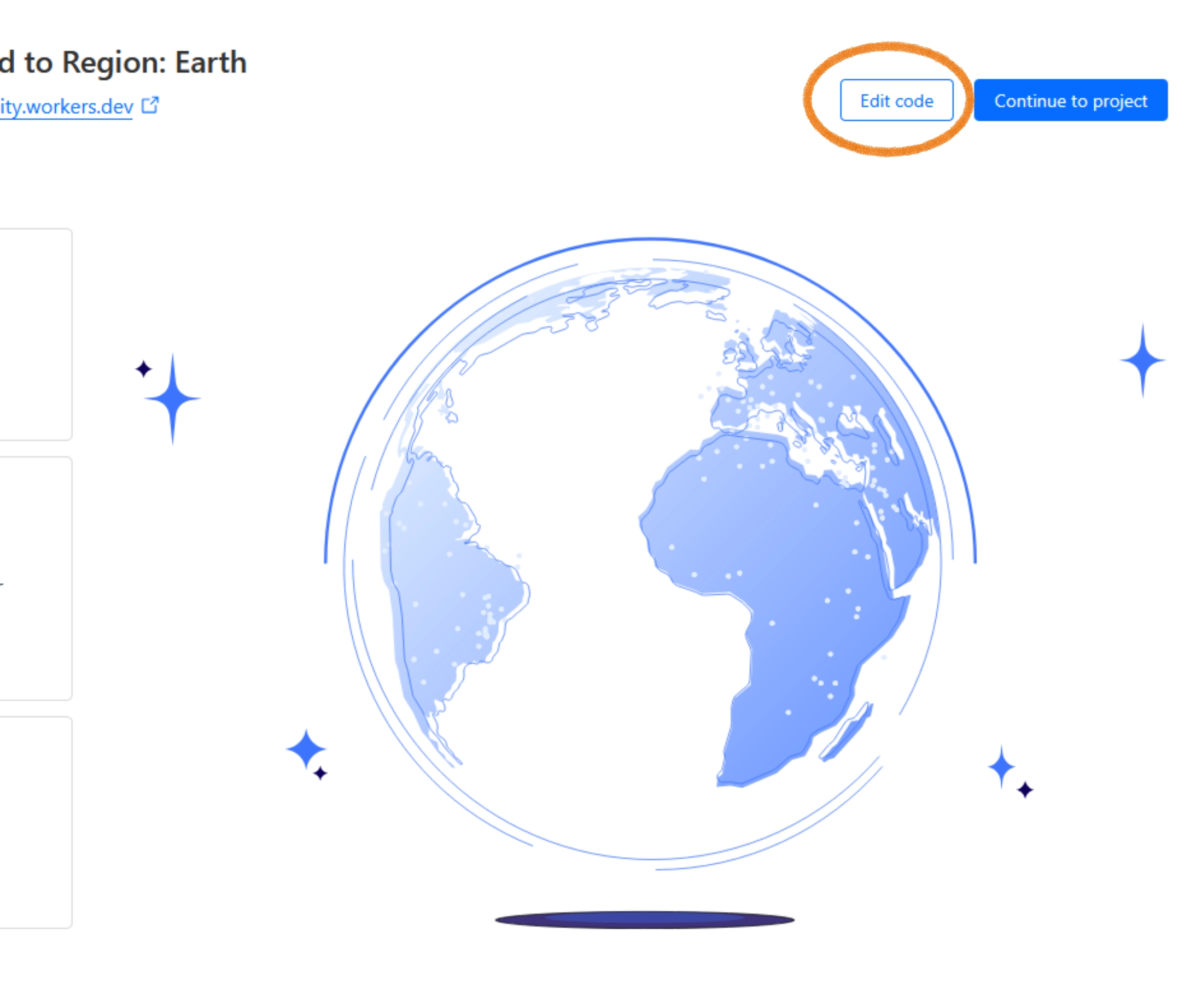

After that you would be redirected to the following page:

Your worker-based website is now live at worker address but it is not too exciting in its current form - it just displays "Hello world!", nothing else.

IMPORTANT! It is now a good idea to go back to your index.html file and replace const API_BASE with your worker address (const API_BASE = "https://your-project-name.your-user-name.pages.dev"; )

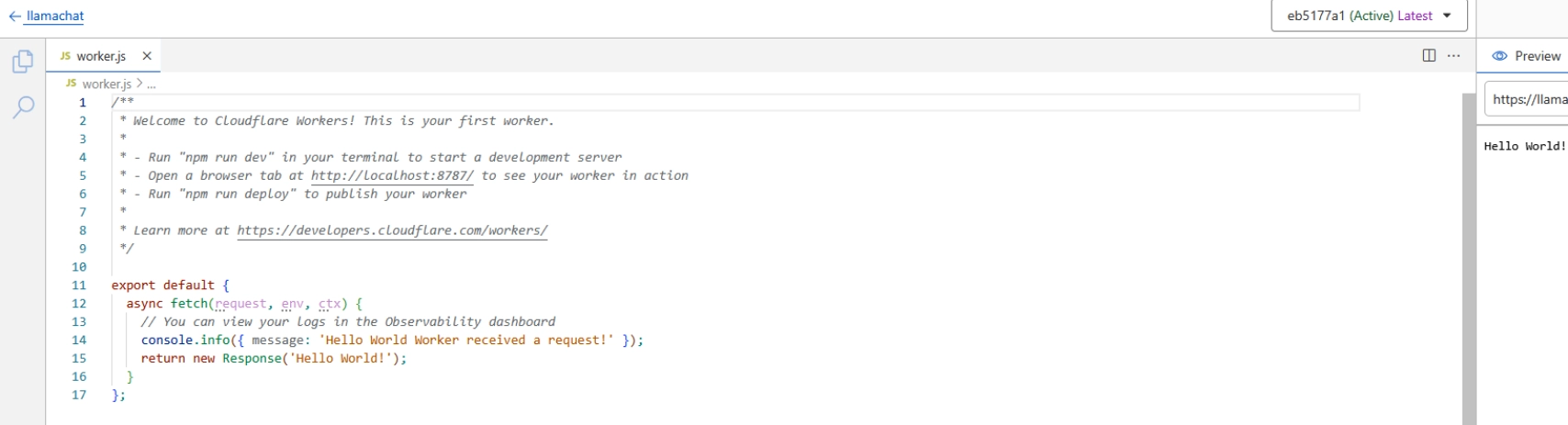

Now we need to make a real backend for our app. Go ahead and copy worker.js from my repo: https://github.com/ZeroCostStartup/llama-chat/blob/main/worker.js

Now click the "Edit code" button on the page you were redirected to after deploying the "Hello world!" worker. Replace the existing worker JS code with the code you copied from my repo.

IMPORTANT! Change const PAGES_ORIGIN to "https://your-project-name.pages.dev" in the worker.js editor above (const PAGES_ORIGIN = "https://your-project-name.pages.dev"; //avoid using trailing slash in URL).
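The trailing-slash warning makes sense once you see how such a constant is typically used. Browsers send the `Origin` header as scheme plus host, with no trailing slash, so an exact string comparison against a value ending in "/" would never match. The sketch below assumes the worker compares the header to PAGES_ORIGIN this way; `corsHeadersFor` is a hypothetical helper, not the repo's code.

```javascript
const PAGES_ORIGIN = "https://your-project-name.pages.dev"; // placeholder from the text

// Return CORS headers only when the request's Origin header matches exactly;
// return null for any other origin so the browser blocks the response.
function corsHeadersFor(requestOrigin) {
  if (requestOrigin !== PAGES_ORIGIN) return null;
  return {
    "Access-Control-Allow-Origin": PAGES_ORIGIN,
    "Access-Control-Allow-Headers": "Authorization, Content-Type",
    "Access-Control-Allow-Methods": "POST, OPTIONS",
    "Vary": "Origin",
  };
}

console.log(corsHeadersFor("https://your-project-name.pages.dev") !== null);  // true
console.log(corsHeadersFor("https://your-project-name.pages.dev/") !== null); // false
```

Keep in mind that this check only restricts browsers. Scripts outside a browser can send any Origin header they like, which is one reason the token is needed as well.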

When you later want to edit your worker code, you can find the "Edit code" button in the upper right corner of your worker page:

Finally, add your secrets (GROQ_API_KEY and JWT_SECRET) to your worker under "Settings" -> "Variables and Secrets":
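Values added under "Variables and Secrets" are handed to a module-style worker through the `env` argument of its `fetch` handler. As a minimal sketch (assuming the worker uses that style; `missingSettings` is a hypothetical helper), a quick sanity check at the start of a request could look like this:

```javascript
// List the required settings that are missing or too short.
function missingSettings(env) {
  const problems = [];
  if (!env.GROQ_API_KEY) problems.push("GROQ_API_KEY is not set");
  if (!env.JWT_SECRET) {
    problems.push("JWT_SECRET is not set");
  } else if (env.JWT_SECRET.length < 32) {
    problems.push("JWT_SECRET is shorter than 32 characters");
  }
  return problems;
}

// Usage inside a worker (not executed here):
//   const problems = missingSettings(env);
//   if (problems.length > 0) return new Response(problems.join("; "), { status: 500 });
```

A check like this turns a forgotten secret into a clear error message instead of a confusing failure when the first chat message is sent.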

The Llama chat bot should be fully functional now.

Please note that although we built in some anti-abuse techniques, it is still relatively easy to get around those guardrails. For example, the message limit is currently set at 10 per user per day, but it is enforced primarily on the client side. A user can simply clear localStorage and start a new session. I will later explain how to limit the number of messages per user IP using a D1 database or Durable Object storage.
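To show why a client-side limit is weak, here is a minimal sketch of a localStorage-style daily counter. The storage key `chat_usage` and the function names are hypothetical; the limit of 10 comes from the text. An in-memory stand-in replaces `window.localStorage` so the sketch also runs outside a browser.

```javascript
const DAILY_LIMIT = 10; // limit from the text

// storage is any object with getItem/setItem, such as window.localStorage.
// Returns true and counts the message if the visitor is under today's limit.
function tryConsumeMessage(storage, today) {
  const saved = JSON.parse(storage.getItem("chat_usage") ?? "null");
  const usage = saved && saved.day === today ? saved : { day: today, count: 0 };
  if (usage.count >= DAILY_LIMIT) return false;
  usage.count += 1;
  storage.setItem("chat_usage", JSON.stringify(usage));
  return true;
}

// In-memory stand-in for localStorage.
function makeMemoryStorage() {
  const map = new Map();
  return {
    getItem: (k) => (map.has(k) ? map.get(k) : null),
    setItem: (k, v) => map.set(k, String(v)),
    clear: () => map.clear(),
  };
}

const storage = makeMemoryStorage();
let sent = 0;
for (let i = 0; i < 12; i++) {
  if (tryConsumeMessage(storage, "2025-10-03")) sent++;
}
console.log(sent); // 10
storage.clear(); // what a visitor does by clearing localStorage
console.log(tryConsumeMessage(storage, "2025-10-03")); // true: the limit is gone
```

The counter lives entirely in the visitor's browser, so the visitor controls it. A server-side count is the only version that cannot be reset this way.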